Machine Learning Articles of the Week: Learning Object Detectors from Scenes, Exploring Emojis on Instagram, A Tutorial on Dynamical Systems on Networks, and more

Object Detectors Emerge In Deep Scene CNNs

ImageNet is the rage with CNNs. These authors show that a byproduct of training to recognize scenes is object detection which is more reliable than other work performed with ImageNet without any supervision for learning object detectors. The author suggests the network hierarchy follows edges, texture, objects, scenes which is kind of neat because it reflects early work in CV, such as David Marr’s “primal sketch” hoping to create similar levels of hierarchy.

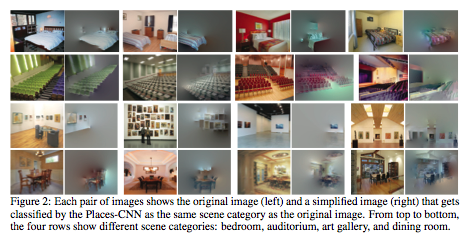

The authors have a couple interesting approaches discussed, like using a type of selective whitening they call scene simplification to improve training data by iteratively removing components that do not affect score. Several of these are pictured below:

Dynamical Systems on Networks: A Tutorial

I haven’t finished reading this yet but it is a great tutorial on analytically tractable dynamical systems applied to networks, from social contagions and voter models to coupled oscillators.

Profiling Top Kagglers: KazAnova Currently #2 in the World

This is a quick interview with Marios Michailidis, one of the highest performing Kaggler in the world on some of his methods to success. The most important features to when seeing a new dataset is to understand the problem, create a metric, set up reliable cross validation consistent with the leaderboard, understand the family of algorithmic approaches and where they could be useful, and iterate and test frequently.

Emojineering Part 1: Machine Learning for Emoji Trends

Emoji usage has increased over time. This is a light read analyzing Instagram’s users for the increasing use of Emoji. They then found what hashtags are associated commonly with each emoji, learning a word2vec vector space, which was then used for visualization and analysis purposes:

Becoming a Bayesian

Curious about Bayesian Machine Learning? I really liked this read because it is one of the more honest reads on Bayesian Machine Learning; many people gloss over the difficulties that arise in practice.

The author discusses his journey from traditional approaches to thinking “about a probabilistic model that relates observables to quantities of interest and of suitable prior distributions for any unknowns that are present in this model.” A second part of the post is available where he delves into the delicate dance between approximate models to provide computationally tractable approaches and rigorously pure Bayesian models where modeling is performed independently of the inference procedure.